MSSQL Server Error 833: Synthesis of Real-World Case Studies

In this article, we discuss “MSSQL Server Error 833: Synthesis of Real-World Case Studies”. MSSQL Server Error 833 is an informational warning indicating that a disk I/O request (read or write) has taken longer than 15 seconds to complete. This condition is known as “stalled I/O” and points to an issue in the underlying storage subsystem rather than in SQL Server itself.

Note: Persistent 833 errors often lead to high PAGEIOLATCH wait times, blocking, and application timeouts. In extreme cases, the SQL Server instance may shut itself down if it cannot access critical files such as tempdb.

SQL Server error 833

SQL Server error 833 (“I/O requests taking longer than 15 seconds to complete”) is a well-known but often misunderstood symptom of underlying infrastructure issues rather than a defect in SQL Server itself.

This error indicates excessive I/O latency at the storage, virtualisation, or network layer and, as noted above, can have a significant impact on database performance, availability, and stability.
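Latency at these layers can also be quantified from inside SQL Server. The Python sketch below assumes you have exported two snapshots of the cumulative counters `num_of_reads`, `io_stall_read_ms`, `num_of_writes`, and `io_stall_write_ms` from `sys.dm_io_virtual_file_stats` (for example with a scheduled query). The dataclass, the function names, and the 20 ms threshold are hypothetical choices for illustration, not fixed rules.

```python
from dataclasses import dataclass


@dataclass
class FileIOStats:
    """Hypothetical container for one row of sys.dm_io_virtual_file_stats."""
    file_name: str
    num_of_reads: int
    io_stall_read_ms: int
    num_of_writes: int
    io_stall_write_ms: int


def avg_latency_ms(stall_ms: int, count: int) -> float:
    """Average latency per I/O in ms; 0.0 when no I/O was issued."""
    return stall_ms / count if count else 0.0


def interval_stats(before: FileIOStats, after: FileIOStats) -> FileIOStats:
    """The DMV counters are cumulative, so subtract an earlier snapshot."""
    return FileIOStats(
        after.file_name,
        after.num_of_reads - before.num_of_reads,
        after.io_stall_read_ms - before.io_stall_read_ms,
        after.num_of_writes - before.num_of_writes,
        after.io_stall_write_ms - before.io_stall_write_ms,
    )


def latency_report(before: FileIOStats, after: FileIOStats, threshold_ms: float = 20.0) -> dict:
    """Average read/write latency over the interval, flagged against an assumed threshold."""
    d = interval_stats(before, after)
    read_ms = avg_latency_ms(d.io_stall_read_ms, d.num_of_reads)
    write_ms = avg_latency_ms(d.io_stall_write_ms, d.num_of_writes)
    return {
        "file": d.file_name,
        "avg_read_ms": read_ms,
        "avg_write_ms": write_ms,
        "slow": read_ms > threshold_ms or write_ms > threshold_ms,
    }


if __name__ == "__main__":
    # Illustrative numbers only, not taken from any of the cases below.
    before = FileIOStats("tempdb.mdf", 1000, 5000, 500, 1000)
    after = FileIOStats("tempdb.mdf", 2000, 45000, 1500, 6000)
    print(latency_report(before, after))
    # {'file': 'tempdb.mdf', 'avg_read_ms': 40.0, 'avg_write_ms': 5.0, 'slow': True}
```

An average over an interval can look acceptable even when a few individual requests stall for 15 seconds or more, which is why error 833 remains a separate and valuable signal.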

Over the years, multiple real-world incidents involving this error have been collected across different customer environments. These cases span heterogeneous infrastructures and reveal how a wide range of factors, such as storage configuration, virtualisation settings, backup operations, network anomalies, and firmware or code defects, can all converge to produce the same SQL Server error condition.

This guide presents a synthesis of the most representative scenarios encountered in production environments. Each case highlights a distinct root cause and the corresponding remediation, with the objective of providing practical insights for system engineers, database administrators, and infrastructure architects.

By correlating SQL Server symptoms with underlying platform behaviours, this article aims to support faster root cause analysis and more effective troubleshooting when SQL Server error 833 appears in complex, virtualised, and storage-intensive environments.


Troubleshooting I/O Requests

The error appears in the SQL Server error log in this format: ‘…I/O requests taking longer than 15 seconds to complete on file…’
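To see how often and on which files the error occurs, the error log can be scanned for this message. The Python sketch below assumes the full message reads like `SQL Server has encountered N occurrence(s) of I/O requests taking longer than 15 seconds to complete on file [...] in database id N`. That wording, the sample lines, and the helper names are assumptions, so check the pattern against your own error log (the database part is treated as optional).

```python
import re
from collections import Counter

# Assumed layout of the error 833 text; adjust after checking your own error log.
MSG_833 = re.compile(
    r"encountered (?P<count>\d+) occurrence\(s\) of I/O requests taking longer than "
    r"(?P<seconds>\d+) seconds to complete on file \[(?P<file>[^\]]+)\]"
    r"(?: in database id (?P<db_id>\d+))?"
)


def parse_833(line: str):
    """Return the fields of one error 833 message, or None if the line is something else."""
    m = MSG_833.search(line)
    if m is None:
        return None
    return {
        "count": int(m.group("count")),
        "seconds": int(m.group("seconds")),
        "file": m.group("file"),
        "db_id": int(m.group("db_id")) if m.group("db_id") else None,
    }


def occurrences_by_file(lines) -> Counter:
    """Sum the reported occurrences per database file, to see which volumes stall most."""
    totals = Counter()
    for line in lines:
        event = parse_833(line)
        if event is not None:
            totals[event["file"]] += event["count"]
    return totals


if __name__ == "__main__":
    # Hypothetical log lines written for illustration.
    log = [
        r"SQL Server has encountered 3 occurrence(s) of I/O requests taking longer than "
        r"15 seconds to complete on file [T:\TempDB\tempdb.mdf] in database id 2.",
        r"SQL Server has encountered 2 occurrence(s) of I/O requests taking longer than "
        r"15 seconds to complete on file [T:\TempDB\tempdb.mdf] in database id 2.",
        "Some unrelated error log entry",
    ]
    print(occurrences_by_file(log))
```

Grouping by file quickly shows whether the stalls concentrate on one volume (for example tempdb) or spread across every datastore, which already narrows the search between a local and a platform-wide cause.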

The following real cases involving this error were collected from different customers over the years.

CASE 1

The problem involved two virtual nodes in a cluster using raw device mapping (RDM) disks. A multipath load-balancing policy different from the one recommended in IBM best practices for SVC had been applied: ‘Fixed’ instead of ‘Round Robin’.
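Why the policy matters can be shown with a toy model. The Python sketch below is not how any multipathing plug-in is implemented; it only counts how many I/Os each path would carry under a ‘Fixed’ policy (everything on one preferred path) versus a ‘Round Robin’ policy that rotates across paths after a configurable number of I/Os. The path names and the `ios_per_switch` parameter are hypothetical.

```python
from collections import Counter
from itertools import cycle


def fixed_policy(paths: list[str], n_ios: int, preferred: int = 0) -> Counter:
    """'Fixed': all I/O goes down the preferred path while it is available."""
    return Counter({paths[preferred]: n_ios})


def round_robin_policy(paths: list[str], n_ios: int, ios_per_switch: int = 1) -> Counter:
    """'Round Robin': rotate through all paths, switching every ios_per_switch I/Os."""
    counts = Counter()
    rotation = cycle(paths)
    current = next(rotation)
    for i in range(n_ios):
        if i > 0 and i % ios_per_switch == 0:
            current = next(rotation)
        counts[current] += 1
    return counts


if __name__ == "__main__":
    paths = ["path_A", "path_B", "path_C", "path_D"]  # hypothetical path names
    print(fixed_policy(paths, 1000))
    # Counter({'path_A': 1000})
    print(round_robin_policy(paths, 1000, ios_per_switch=10))
    # Counter({'path_A': 250, 'path_B': 250, 'path_C': 250, 'path_D': 250})
```

With ‘Fixed’, a single path and the storage port behind it absorb the entire load of both cluster nodes, which is one plausible way queues build up until individual requests exceed the 15-second limit.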

CASE 2

The problem affected many hosts and was caused by the SVC cache being emptied. It was resolved by upgrading the code on all SVCs pointing to the IBM storage.

CASE 3

The problem was due to high co-stop time, i.e. too many vCPUs configured on a single ESX host working simultaneously. Co-stop time is the time it takes to align all of a VM's vCPUs for simultaneous execution. As co-stop time increases, it can impact all VMs allocated to the same ESX host. In this case, the anomaly was caused by a weekly schedule that ran antivirus scans on all VMs at the same time.
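Co-stop can be turned into a percentage in the same way CPU ready time commonly is: vCenter reports it as a summation in milliseconds per sample, and its real-time charts use 20-second samples. The Python sketch below assumes that 20-second interval and spreads a VM-level value across the VM's vCPUs. The 3% threshold, the function names, and the sample values are assumptions for illustration, not VMware-published limits.

```python
def costop_percent(costop_ms: float, vcpus: int = 1, interval_s: float = 20.0) -> float:
    """Convert a co-stop summation counter (ms per sample) into an average % per vCPU.

    interval_s defaults to 20 s, the sample length assumed for vCenter real-time charts;
    the VM-level value is divided across its vCPUs.
    """
    return 100.0 * costop_ms / (interval_s * 1000.0 * vcpus)


def vms_over_threshold(samples: dict[str, tuple[float, int]], threshold_pct: float = 3.0) -> list[str]:
    """Names of VMs whose per-vCPU co-stop exceeds an assumed threshold."""
    return sorted(
        name
        for name, (costop_ms, vcpus) in samples.items()
        if costop_percent(costop_ms, vcpus) > threshold_pct
    )


if __name__ == "__main__":
    # Hypothetical 20-second samples taken while a scheduled AV scan runs on every VM.
    samples = {"sql01": (4000.0, 4), "app02": (200.0, 2), "web03": (1500.0, 2)}
    for name, (ms, n) in samples.items():
        print(f"{name}: {costop_percent(ms, n):.1f}% co-stop per vCPU")
    print(vms_over_threshold(samples))
    # ['sql01', 'web03']
```

Comparing these percentages inside and outside the scan window is a simple way to confirm that a scheduled job, rather than steady-state load, drives the co-stop spikes.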

CASE 4

The problem affecting an MSSQL instance on a VM was significantly mitigated as soon as the VM was moved from a datastore with deduplication enabled to one with deduplication disabled. Further analysis suggests that the problem is specific to a particular storage model: Dell Compellent SC5020F.

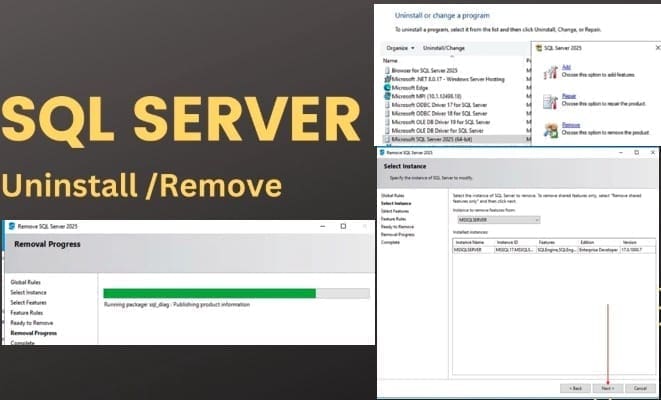


CASE 5

A network problem generated massive broadcast traffic, which caused the storage controllers to crash: they first froze and then failed to come back up when a reboot was attempted. The controllers were then manually forced to restart, but storage latencies remained abnormal afterwards. Dell support was engaged, identified errors in the logs, and fixed them with a firmware upgrade.

CASE 6

The problem was related to a backup snapshot that failed to consolidate. This forces the VM to run under sub-optimal file system conditions on the storage, since it works on the snapshot files instead of the consolidated virtual disk files. The problem was solved by consolidating manually with the VM powered off.
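A VM left running on snapshot files can often be spotted from its folder listing, because snapshot disks carry a six-digit sequence suffix. The Python sketch below is a heuristic, assuming the usual VMware naming (`<disk>-000001.vmdk` plus a `-delta` or `-sesparse` data file) and a plain list of file names obtained, for example, from the datastore browser. The function name is hypothetical, and a clean result does not replace checking the VM's snapshot state in vSphere.

```python
import re
from collections import defaultdict

# Snapshot disks usually follow <disk>-000001.vmdk, <disk>-000001-delta.vmdk
# or <disk>-000001-sesparse.vmdk; base disks (<disk>.vmdk, <disk>-flat.vmdk) do not match.
SNAPSHOT_DISK = re.compile(r"^(?P<disk>.+)-(?P<seq>\d{6})(?:-(?:delta|sesparse))?\.vmdk$")


def snapshot_levels(filenames: list[str]) -> dict[str, int]:
    """Count distinct snapshot levels per base disk found in a VM folder listing."""
    levels = defaultdict(set)
    for name in filenames:
        m = SNAPSHOT_DISK.match(name)
        if m:
            levels[m.group("disk")].add(m.group("seq"))
    return {disk: len(seqs) for disk, seqs in levels.items()}


if __name__ == "__main__":
    # Hypothetical folder listing of a VM whose backup snapshot failed to consolidate.
    listing = [
        "sql01.vmdk", "sql01-flat.vmdk", "sql01-ctk.vmdk",
        "sql01-000001.vmdk", "sql01-000001-delta.vmdk",
        "sql01-000002.vmdk", "sql01-000002-sesparse.vmdk",
    ]
    print(snapshot_levels(listing))
    # {'sql01': 2}
```

Any non-empty result on a VM that should have no snapshots after its backup window is a hint that consolidation did not complete.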

CASE 7

A Storage vMotion was performed from a low-performance datastore (7k aggregate) to a higher-performance datastore (15k aggregate).
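Assuming “7k” and “15k” refer to aggregates of 7.2k RPM and 15k RPM disks, the gap between the two datastores can be estimated with the usual rule of thumb that a single disk serves about 1 / (average seek time + average rotational latency) random I/Os per second. The seek times below are assumed typical values, and the estimate ignores controller cache, RAID write penalties, and the number of disks per aggregate, so treat it as an order-of-magnitude sketch.

```python
def rotational_latency_ms(rpm: float) -> float:
    """Average rotational latency: half a revolution, in milliseconds."""
    return 0.5 * 60_000.0 / rpm


def spindle_iops(rpm: float, avg_seek_ms: float) -> float:
    """Rule-of-thumb random IOPS for one disk: 1 / (average seek + rotational latency)."""
    return 1000.0 / (avg_seek_ms + rotational_latency_ms(rpm))


if __name__ == "__main__":
    # Assumed average seek times; check the datasheet of the actual disks.
    for label, rpm, seek_ms in [("7k aggregate", 7200, 8.5), ("15k aggregate", 15000, 3.5)]:
        per_disk = spindle_iops(rpm, seek_ms)
        print(f"{label}: ~{per_disk:.0f} IOPS per disk, "
              f"{1000.0 / per_disk:.1f} ms per random I/O")
```

Under these assumptions a 15k disk serves more than twice the random I/O of a 7.2k disk, which is why moving a busy SQL Server VM between such datastores can noticeably change its latency.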

I hope you found this guide on “MSSQL Server Error 833: Synthesis of Real-World Case Studies” very useful. Please, feel free to leave a comment below.